13 AI scam tools criminals are using in fraud attacks

AI‑powered fraud is on the rise and evolving faster than most risk teams can keep up.

We recently published an article showing how easy it is to spin up a realistic phishing website using free, off‑the‑shelf AI tools. But that’s just scratching the surface compared to the new wave of AI fraud tools emerging, purpose‑built to enable fraud at scale.

These AI fraud tools are not generic chatbots or image generators repurposed by bad actors. They are built specifically for fraud and designed to target every stage of the lifecycle. That includes account creation, identity spoofing, real-time voice manipulation, and more. Many are packaged as ready-to-use kits, complete with documentation and support communities, and are actively shared across cybercrime forums.

It's all part of a popular new business model called “Fraud-as-a-Service”.

In this post, we’ll unpack common AI fraud tools being used today, explain how they work, and highlight the detection signals fraud leaders should be watching for right now.

Understanding Fraud‑as‑a‑Service

Fraud‑as‑a‑Service has turned fraud into a business model. Instead of building their own malware or phishing sites, fraudsters can now buy ready‑made kits and AI tools on Telegram channels or dark web forums. Many of these come with subscription tiers, updates, user guides, and even on‑call customer support, making scams easier to run than ever.

Not only do these tools make fraud more accessible, they also let fraud attacks evolve faster than risk teams can keep up. A single tool built by one fraudster or fraud ring can be deployed by hundreds of bad actors at once. Those users then gather feedback, A/B test multiple tactics in parallel, and share tips on what’s working best in real time.

The end result is a large volume of attacks that are increasingly hard to spot. By the time your risk team has cleared its queue and shipped a rule to block an attack, the fraudsters have already moved on to a new version of the scam.

That’s why it’s critical for risk teams to understand what these tools are and how they work. The better you know how fraudsters are using them, the more effective you’ll be in spotting early signals and putting controls in place before the losses happen.

1. WormGPT

WormGPT is a jailbroken large language model built for fraud. Fraudsters use it to create professional-sounding phishing emails, craft business email compromise (BEC) messages, and generate malicious attachments designed to slip past traditional filters. It first appeared in 2023 on dark web forums and quickly spread across Telegram channels as an “uncensored” alternative to ChatGPT tailored for cybercrime.

With WormGPT, an attacker can:

- Draft urgent payment requests that match an organization’s tone

- Generate fake invoices with realistic formatting including logos

- Embed malware into PDF or Excel attachments

- Rewrite phishing messages to evade spam filters


Another popular tool like WormGPT is Agent Zero. It’s very similar, except it goes one step further and scrapes LinkedIn profiles, press releases, and financial filings to gather context on executives, vendors, and active deals before producing the phishing content.

As you can imagine, the added intel makes the messages more believable. Instead of a generic urgent wire request, an Agent Zero email might mention a specific vendor, a live project, or a contract deadline so it convinces a finance manager the request is legitimate.

2. Business Invoice Swapper

Business Invoice Swapper is a tool sold by groups like the GXC Team that intercepts incoming invoice emails and swaps out the payment instructions before the invoices are paid. The altered invoice remains embedded in the thread, with all original layouts, vendor names, and line items intact.


A typical workflow looks like this:

- The attacker scans compromised email accounts for invoice-related messages via POP3/IMAP protocols.

- They replace the supplier’s bank account details with mule account credentials.

- The modified invoice is either reinserted into the original thread or sent to related contacts to trigger the payment.

This approach is powerful because the fraud often goes unnoticed during normal operations. AP teams may only spot issues when the legitimate vendor follows up to say they didn’t receive payment.
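One control that targets this workflow is to compare the payment details on every incoming invoice against the vendor master record before paying. The sketch below is a minimal illustration, not a production control: `BankDetails`, `VENDOR_MASTER`, `review_invoice`, and the sample vendor are hypothetical names and data invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BankDetails:
    account_number: str
    routing_number: str


def normalize(value: str) -> str:
    """Drop spaces and dashes so formatting differences don't raise false alarms."""
    return "".join(ch for ch in value if ch.isalnum()).upper()


def bank_details_changed(on_file: BankDetails, on_invoice: BankDetails) -> bool:
    """Return True when the invoice's payment details differ from the record on file."""
    return (
        normalize(on_file.account_number) != normalize(on_invoice.account_number)
        or normalize(on_file.routing_number) != normalize(on_invoice.routing_number)
    )


# Hypothetical vendor master data, keyed by an illustrative vendor ID.
VENDOR_MASTER = {
    "acme-supplies": BankDetails("1234 5678 90", "021-000-021"),
}


def review_invoice(vendor_id: str, invoice_details: BankDetails) -> str:
    """Decide whether an invoice can proceed or should be held for manual review."""
    on_file = VENDOR_MASTER.get(vendor_id)
    if on_file is None:
        return "hold: unknown vendor"
    if bank_details_changed(on_file, invoice_details):
        return "hold: bank details differ from vendor record"
    return "ok"
```

A held invoice would then be confirmed with the vendor through a channel other than the email thread, since the thread itself may be compromised.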

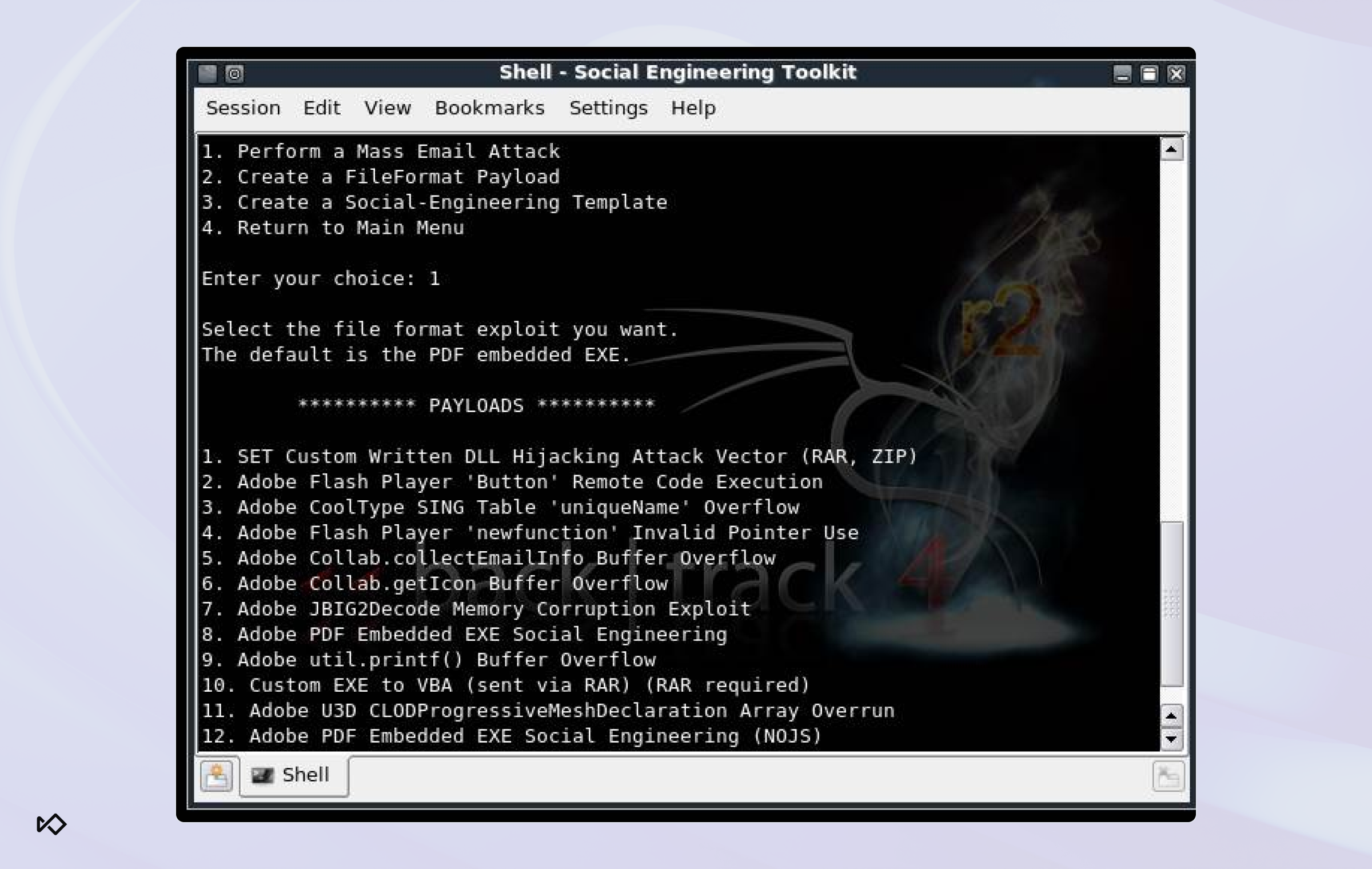

3. Hacked open‑source projects (SET & GoPhish)

Tools like the Social‑Engineer Toolkit (SET) and GoPhish were originally created for penetration testers to simulate phishing and social‑engineering attacks in controlled environments. Fraudsters have since repurposed these open‑source kits into plug‑and‑play platforms for large‑scale phishing operations, often augmented with scam content generated by AI tools.

With these tools, attackers can:

- Clone corporate login pages that look identical to real portals

- Launch targeted spear‑phishing campaigns at scale

- Track who opens emails and clicks phishing links, refining tactics over time

These frameworks are easy to deploy, come with email and landing page templates, and automate entire attack workflows. When paired with AI-generated messaging (like WormGPT or Agent Zero output), they create increasingly convincing phishing schemes that bypass standard filters.

4. FraudGPT, DarkBard, and DarkWizardAI

FraudGPT and DarkBard are black‑hat versions of ChatGPT sold on underground forums as all‑in‑one kits for cybercrime. Unlike WormGPT, which is mainly used for phishing, these tools can also generate malicious code and forged identity documents.

They stand out for their ability to adapt. Both can analyze stolen company data such as emails, project names, or org charts to craft personalized messages. FraudGPT is often promoted as an “all‑in‑one” kit, while DarkBard is used to mimic natural conversation flows in channels like Slack or SMS.

In some circles, DarkWizardAI was pitched as the successor to FraudGPT, marketed specifically for multilingual fraud campaigns. Unlike earlier LLM tools that focused on English‑language phishing, DarkWizardAI is designed to localize attacks so they feel credible in any market.

5. Morris II Worm

Morris II is one of the first AI‑powered worms designed to spread without any user action, often described as a zero‑click attack. It was developed by researchers at Cornell Tech and the Israel Institute of Technology to show how generative AI assistants can be hijacked with self‑replicating prompts.

How Morris II works:

- A malicious prompt is embedded in an email, document, or chat message

- When an AI assistant or LLM processes the input, it executes the worm automatically

- The worm automatically replicates across connected systems

- Once active, it can exfiltrate sensitive data, steal credentials, or intercept financial workflows

For risk teams, Morris II underscores how quickly threats can move inside systems you already trust. An attack like this can scale through AI‑powered workflows in minutes, leaving analysts with little time to respond before funds or data are at risk.
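One signal defenders might watch for is an assistant output that copies a long verbatim run of the untrusted input it just processed, since a self-replicating prompt has to reproduce itself to spread. The sketch below is illustrative only: the function names and the 80-character threshold are assumptions made for this example, not part of the Morris II research, and legitimate quoting (such as email reply chains) would need to be allowed for.

```python
from difflib import SequenceMatcher


def longest_shared_run(untrusted_input: str, model_output: str) -> int:
    """Length of the longest block of text that appears verbatim in both strings."""
    matcher = SequenceMatcher(None, untrusted_input, model_output, autojunk=False)
    match = matcher.find_longest_match(0, len(untrusted_input), 0, len(model_output))
    return match.size


def looks_self_replicating(untrusted_input: str, model_output: str, min_run: int = 80) -> bool:
    """Flag outputs that echo a long verbatim chunk of the untrusted input.

    min_run is an assumed threshold for illustration; tune it on real traffic.
    """
    return longest_shared_run(untrusted_input, model_output) >= min_run
```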

6. ViKing

ViKing is another concept tool, developed by researchers at Instituto Superior Técnico (University of Lisbon) to show how voice phishing could be fully automated with AI. It combines a large language model with speech‑to‑text and text‑to‑speech pipelines, allowing it to run entire phone scams without human intervention.

In controlled trials involving 240 participants, ViKing successfully convinced 52% to hand over confidential data. Among participants who had not been warned about vishing threats, that success rate climbed to 77%. The tool cloned voices from short audio samples, carried on live conversations, and adapted its responses in real time.

While still a research project, the design shows how easily attackers could scale automated deepfake vishing once such tools make their way into underground markets.

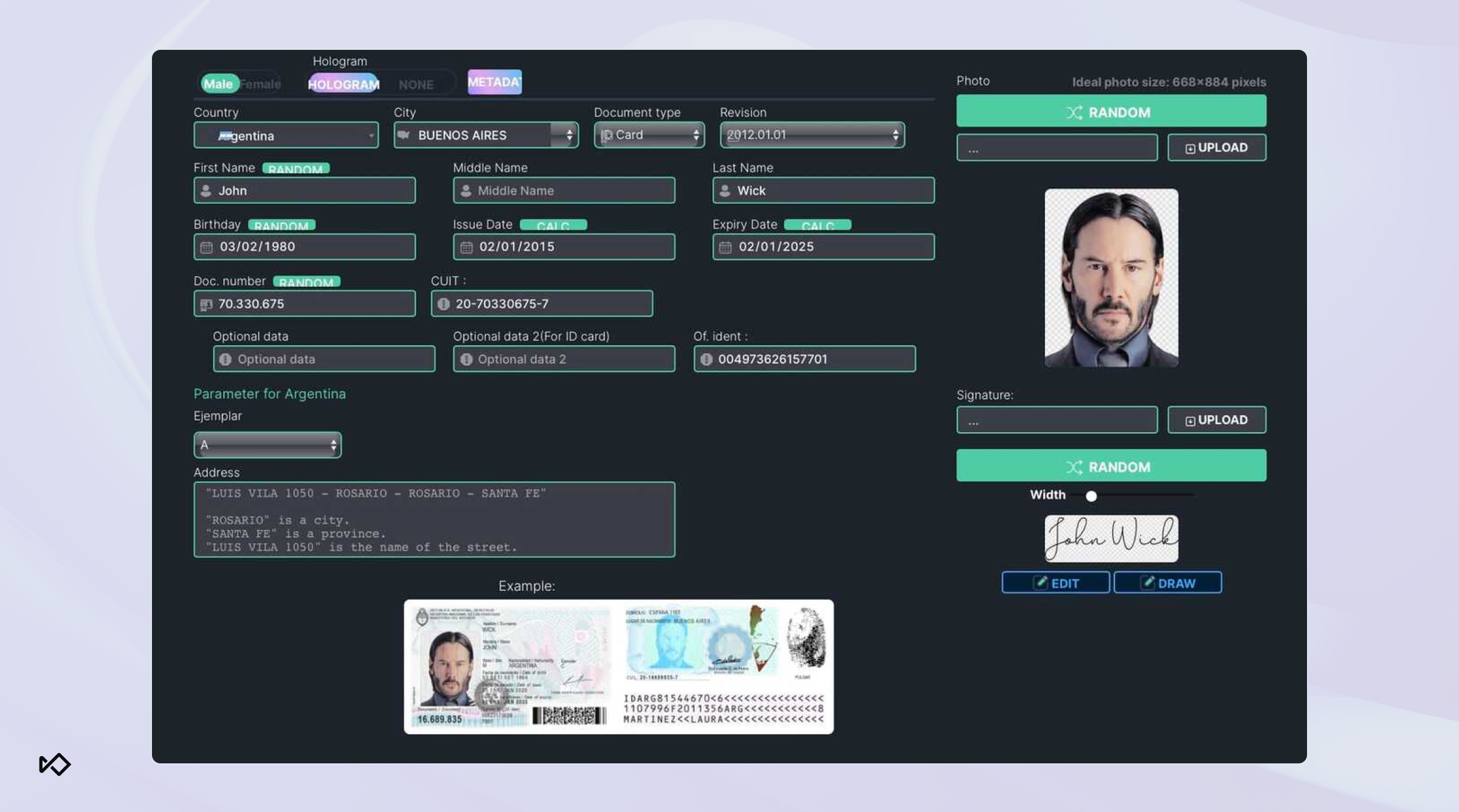

7. OnlyFake

OnlyFake is a document-fraud service that fraudsters use to bypass KYC checks. For as little as $15, users can generate highly realistic digital IDs, passports, invoices, or other official documents.

Unlike simple Photoshop-based forgeries, OnlyFake’s automated design process scales identity fraud with minimal effort. The service can incorporate realistic security features such as hologram styling, barcodes, and authentic‑looking metadata for government‑issued IDs. It also allows fraudsters to generate documents in bulk using spreadsheet uploads, making it easy to create large batches of synthetic identities at once.

If you’re relying solely on visual inspection or basic KYC systems, tools like OnlyFake make it far too easy for synthetic identities to slip through.

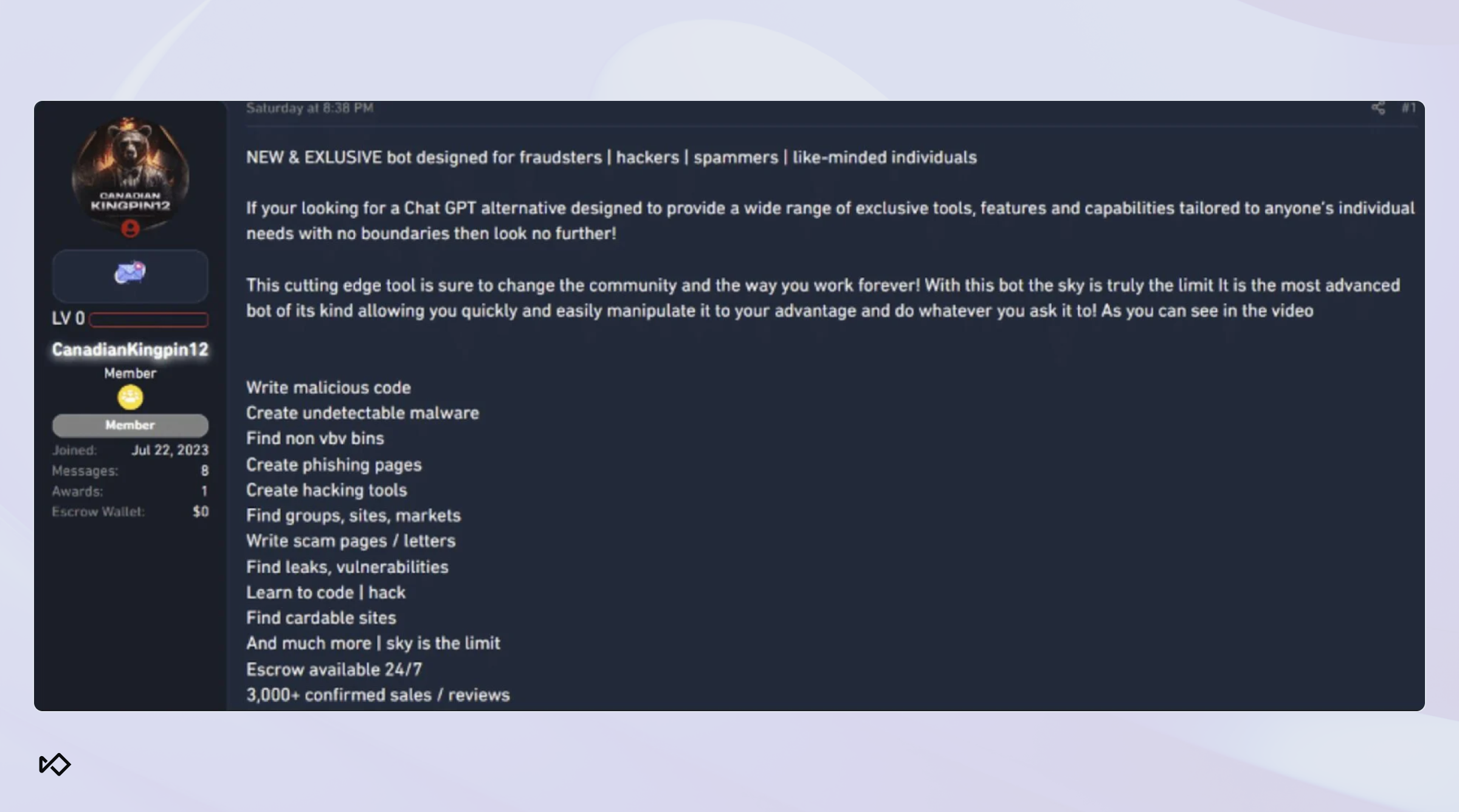

8. Fraud starter kits

Fraudsters are also selling bundles that include all the tools needed for phishing, account takeovers, and carding operations for about $100-$150.

Notable platforms include 16Shop, which offered branded phishing kits impersonating Apple, Amazon, PayPal, and American Express before its 2023 takedown, and LabHost, which supported campaigns targeting banking customers in North America and Europe until it was dismantled in 2024.

A typical kit will include:

- A remote access trojan such as Remcos to take over victim devices

- Keyloggers to capture banking credentials and authentication data

- Ready‑made phishing templates for banks, ecommerce, and payment platforms

- Carding software to clone or test stolen credit and debit cards

- Crypters to obfuscate malware and avoid detection

- Proxy or VPN services for anonymity and location masking

9. Remcos in Excel

Remcos is a remote access trojan (RAT) that fraudsters often distribute through Excel spreadsheets disguised as routine invoices, budgets, or payment files. Once the recipient enables macros, the malware installs quietly and gives attackers full access to the victim’s device.

From there, Remcos can log keystrokes, steal credentials, and monitor online banking sessions in real time. Because it runs in memory rather than installing visible files, victims often do not realize their system has been compromised until fraudulent payments or account changes appear.

Remcos is frequently bundled into fraud starter kits, where it works alongside keyloggers and phishing templates to enable full account takeover campaigns.
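Because this delivery method depends on the recipient enabling macros, a basic mail-gateway check is to flag spreadsheet attachments that carry a VBA project at all. The sketch below assumes the attachment is a modern Office Open XML file (a ZIP archive, as `.xlsm` files are) and looks for the `vbaProject.bin` part. The function name is hypothetical, and legacy `.xls` files use a different container that this check does not cover.

```python
import io
import zipfile


def has_vba_macros(data: bytes) -> bool:
    """Return True if the bytes are a ZIP-based Office file containing a VBA project.

    Non-ZIP input (including legacy .xls files) returns False, so this is only
    one signal and not a complete macro detector.
    """
    try:
        with zipfile.ZipFile(io.BytesIO(data)) as archive:
            return any(
                name.lower().endswith("vbaproject.bin")
                for name in archive.namelist()
            )
    except zipfile.BadZipFile:
        return False
```

A flagged file is not necessarily malicious, but an unexpected macro-enabled "invoice" from an external sender is usually worth quarantining for review.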

10. Deep-Live-Cam

Deep‑Live‑Cam is a deepfake video tool that lets fraudsters impersonate executives or vendors during live video calls. Using AI‑generated facial mapping, it creates a realistic likeness that can appear on Zoom, Teams, or Google Meet in real time.

With Deep‑Live‑Cam, an attacker can:

- Generate real‑time video deepfakes of executives or vendors

- Join or hijack business calls to pressure staff into urgent transfers

- Sync facial expressions and lip movements closely enough to look authentic

A 2024 case in Hong Kong showed the impact when a finance worker was tricked into wiring $25 million after attending a video call where multiple “colleagues,” including the CFO, were convincingly impersonated.

For risk teams, the danger is that these scams bypass email defenses entirely and exploit the trust employees place in face‑to‑face interactions. While it’s common to embed device intelligence into customer-facing apps, most businesses do not get these types of signals for internal communications.

11. ScamFerret

ScamFerret was originally created as a research tool for phishing detection but has since been co‑opted by fraudsters to fine-tune their scams. It simulates real user behavior on phishing pages, giving attackers feedback on which site designs are more likely to fool both humans and automated security filters.

With ScamFerret, an attacker can:

- Test multiple phishing page designs against simulated user interactions

- Identify which layouts and flows bypass AI‑based detection systems

- Optimize content and form fields to increase victim conversion rates

12. PersonaForge

PersonaForge is an open‑source Python toolkit designed to generate realistic synthetic identities for testing, research, or development.

While not a commercial Fraud‑as‑a‑Service product, its ability to programmatically create detailed fake personas offers a preview of how fraudsters could scale synthetic identity attacks. It can generate randomized profiles including names, birthdays, email addresses, hobbies, and passwords, and supports bulk exports in JSON format.

Its ability to produce consistent metadata and plausible digital footprints makes these profiles far harder to spot than crude fakes.

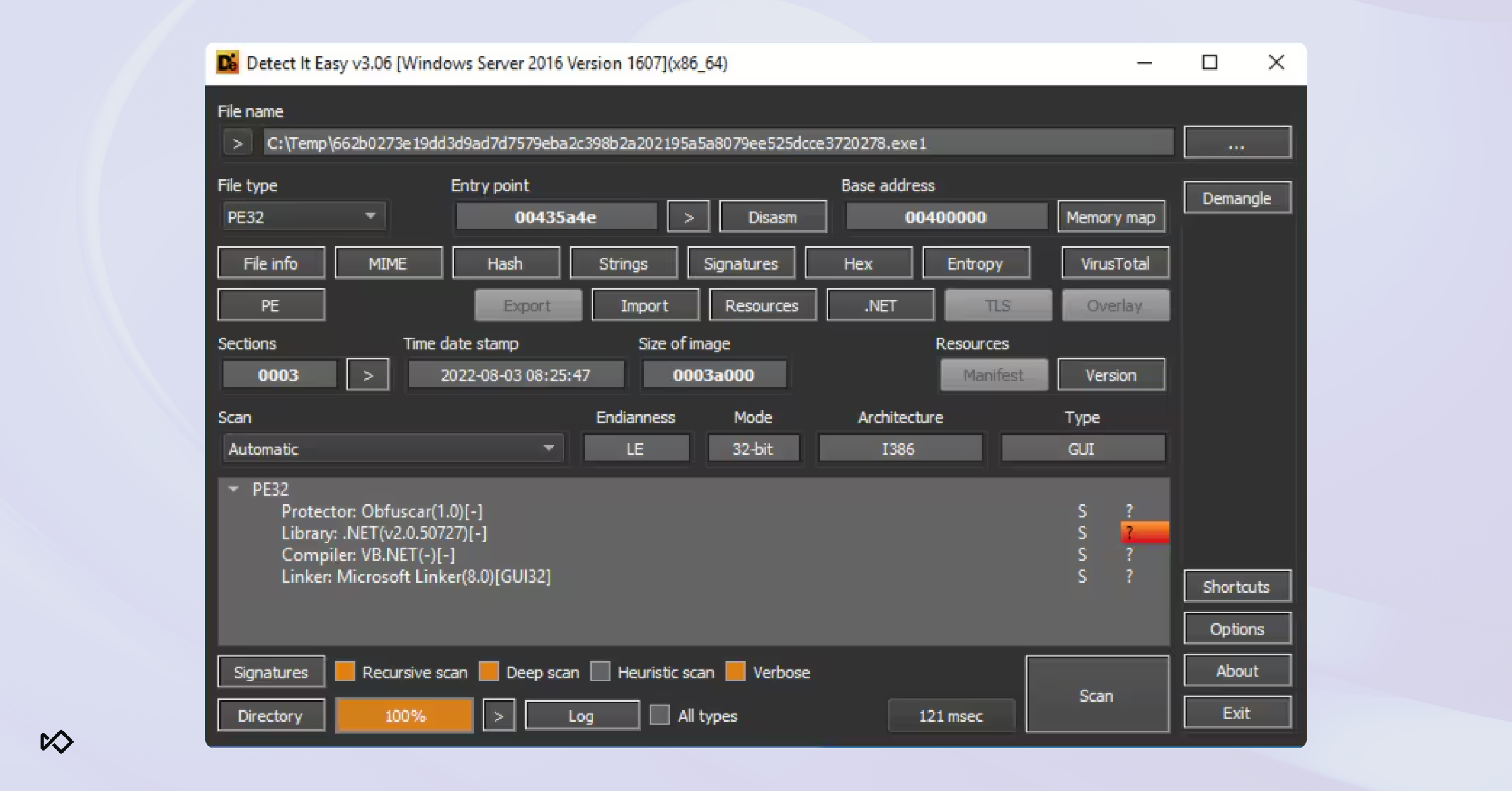

13. Agent Tesla (and variants)

Agent Tesla is one of the most widely used information‑stealing malware families, active since 2014 and still popular because of its low cost and effectiveness. Recent variants have added AI‑assisted capabilities to better capture login credentials and evade detection.

Once installed, Agent Tesla records every keystroke, takes screenshots, and harvests stored credentials from browsers, email clients, and VPNs. Attackers use the stolen data to log into banking portals, modify vendor records, or initiate unauthorized transfers. Unlike traditional trojans, Agent Tesla does not require admin privileges, which makes it easier to deploy through phishing attachments or malicious Excel add‑ins.

How to stop AI scam tools before they scale

AI scam tools don’t just repeat what worked yesterday. They evolve, adapt, and shift the moment something is blocked. Static rules and traditional detection systems fall behind fast. Staying ahead means detecting intent early, not reacting late.

Start with deep device intelligence

Device telemetry signals are critical for spotting fraud before it escalates. Fraud may begin with a phishing link or a suspicious login, but device intelligence surfaces many more clues while an attack is under way.

Simulated devices, rooted phones, spoofed operating systems, and browser automation are common tools used to hide fraud. Risk teams that monitor for unusual hardware setups, unsupported configurations, or mismatched environments can flag fraud long before any visible red flags appear.
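To make this concrete, here is a minimal sketch of how such signals might be combined into a simple risk score. Everything in it is an assumption for illustration: the `DeviceSignals` fields, the weights, and the 100-point cap are invented for this example and do not reflect any particular vendor's model.

```python
from dataclasses import dataclass


@dataclass
class DeviceSignals:
    is_emulator: bool = False
    is_rooted: bool = False
    reported_os: str = ""   # what the client claims to be running
    observed_os: str = ""   # what lower-level telemetry suggests
    automation_detected: bool = False


# Illustrative weights only; real systems would calibrate these on labeled data.
WEIGHTS = {
    "emulator": 40,
    "rooted": 20,
    "os_mismatch": 30,
    "automation": 40,
}


def device_risk(signals: DeviceSignals) -> tuple[int, list[str]]:
    """Return a 0-100 score plus the reasons that contributed to it."""
    reasons = []
    if signals.is_emulator:
        reasons.append("emulator")
    if signals.is_rooted:
        reasons.append("rooted")
    if signals.reported_os and signals.observed_os and signals.reported_os != signals.observed_os:
        reasons.append("os_mismatch")
    if signals.automation_detected:
        reasons.append("automation")
    score = min(100, sum(WEIGHTS[reason] for reason in reasons))
    return score, reasons
```

Returning the reasons alongside the score keeps the decision explainable, which matters when an analyst has to review or overturn it.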

Track behavioral signals that reveal fraud in progress

Behavioral biometrics matter because every fraudster has a tell. They may use bots that click through screens too quickly, or show unnatural typing patterns when entering stolen PII from a phishing attack. Victims being coached may also hesitate mid-transaction, retype values, pause unexpectedly, or switch screens while on a call.

These small disruptions add up to a clear signal. Even in AI-driven scams with real-time voice manipulation, behavior often gives away the fraud even when the conversation itself seems convincing.
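As a simple illustration of one such tell, the sketch below flags typing that is either implausibly fast or implausibly regular, based on the gaps between keystroke timestamps. The function name and both thresholds are assumptions chosen for the example, not validated cut-offs.

```python
from statistics import mean, pstdev


def cadence_flags(
    key_times_ms: list[float],
    min_mean_gap_ms: float = 40.0,  # assumed floor for human typing speed
    min_cv: float = 0.15,           # assumed floor for natural variation
) -> list[str]:
    """Return flags describing suspicious typing cadence for one form field."""
    gaps = [later - earlier for earlier, later in zip(key_times_ms, key_times_ms[1:])]
    if len(gaps) < 5:
        return []  # too little data to judge
    flags = []
    average_gap = mean(gaps)
    if average_gap < min_mean_gap_ms:
        flags.append("too_fast")
    # Coefficient of variation: humans are irregular, scripted input is often uniform.
    if average_gap > 0 and pstdev(gaps) / average_gap < min_cv:
        flags.append("too_regular")
    return flags
```

For example, keystrokes arriving exactly 10 ms apart would raise both flags, while a person typing with uneven gaps of 100 to 250 ms would raise none.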

Analyze across sessions, channels, and devices

Modern scams don’t unfold in a single moment, which is why a Connections Graph is so powerful. A phishing email might lead to a login from a different device, followed by changes to contact information and then a phone call prompting a large transfer.

On their own, each of these steps might seem safe. Together, they form a pattern. By mapping connections between sessions, devices, accounts, and behaviors, risk teams can surface coordinated fraud attempts that slip past rule-based systems.
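The core idea can be sketched with a small union-find structure that groups accounts sharing devices, directly or through a chain of other accounts. This is a toy illustration under stated assumptions: the function name and the `(account_id, device_id)` event shape are hypothetical, and a real graph would link many more entity types than these two.

```python
from collections import defaultdict


def linked_account_clusters(events: list[tuple[str, str]]) -> list[set[str]]:
    """Group accounts connected through shared devices.

    events holds (account_id, device_id) pairs. Only clusters containing
    two or more accounts are returned.
    """
    parent: dict[tuple[str, str], tuple[str, str]] = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    # Tag nodes by kind so an account ID can never collide with a device ID.
    for account_id, device_id in events:
        union(("account", account_id), ("device", device_id))

    clusters = defaultdict(set)
    for node in list(parent):
        kind, value = node
        if kind == "account":
            clusters[find(node)].add(value)
    return [members for members in clusters.values() if len(members) > 1]
```

Given events where accounts `a1` and `a2` share one device and `a2` and `a3` share another, all three land in the same cluster even though `a1` and `a3` never touched the same device.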

Detect early signs of AI phishing and impersonation

Zero‑day signals can expose an AI fraud attack long before it becomes visible. Fraudsters may use mobile emulators to spoof a webcam stream, residential proxies to disguise their true location, or SIM swaps and eSIM activations to take over a victim’s phone.

At the same time, they spin up cloned login pages, fake customer support chats, or register confusingly similar domains. These early warnings in infrastructure, device behavior, and network patterns are often the first signs of an AI‑driven phishing campaign.

Monitoring them alongside domain registration tracking and dark web intelligence gives risk teams visibility into threats before they reach customers.
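For the domain-tracking piece, one common starting point is to compare newly registered domains against the brands you protect using edit distance. The sketch below is a minimal version of that idea: the function names, the `example-bank.com` domain, and the distance threshold of 2 are assumptions for illustration, and it will not catch every trick (homoglyphs in other scripts, for instance, need separate handling).

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character edits."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


def is_lookalike(candidate: str, protected: str, max_distance: int = 2) -> bool:
    """True if candidate is close to, but not the same as, the protected domain."""
    candidate, protected = candidate.lower(), protected.lower()
    if candidate == protected:
        return False
    return edit_distance(candidate, protected) <= max_distance
```

With these defaults, `examp1e-bank.com` would be flagged against `example-bank.com`, while an unrelated domain would not.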

See what others are seeing through Sonar

Consider joining a consortium like Sonar to stay ahead of fast-moving AI fraud attacks. Sonar is an independent fraud data consortium that allows risk teams to both share and receive real-time fraud insights. By pooling intelligence across banks, fintechs, merchants, and crypto platforms, Sonar can reveal a fraudster’s entire financial footprint. This means a scam first spotted at one institution can be flagged across the ecosystem before it spreads.

Fraud is already scaling faster than most teams can respond

Criminals no longer need to build malware or craft phishing emails on their own. With just a credit card and access to Telegram, they can launch entire campaigns using tools like WormGPT, FraudGPT, or deepfake kits. The barrier to entry has vanished, and the pace of AI-driven fraud is accelerating quickly.

We’re still early in the AI race, yet attackers already have countless tools to execute large-scale scams with minimal effort. This trend will only grow, which is why fraud vendors like Sardine are investing heavily in R&D and continuously shipping new defenses to stop these attacks before they hit your customers.

If you want to learn how to protect your company from the next wave of AI fraud, please reach out. We’d love to help.
