Deepfake detection: A practical guide for fraud prevention teams

If you work in fraud or compliance, you’ve probably already started seeing signs of deepfakes and synthetic documents in your queues.

AI-powered fraud is rising fast, and it’s only getting easier to pull off. New tools are constantly being released that make it simple to generate realistic faces, videos, and documents with minimal effort. What used to take technical skill can now be done with a few clicks, and that accessibility is driving more fraud attempts across every industry.

The challenge is that most existing systems weren’t built to spot this kind of fraud. And now, with new regulations emerging around AI-generated identities and synthetic media, risk teams need to be able to effectively detect and stop deepfake fraud.

This guide walks through the different types of deepfakes, how fraudsters use them, and how risk teams can detect them.

Types of deepfakes and how they’re delivered

Deepfakes are AI-generated or manipulated images and videos designed to make someone appear real or change what they look like. They fall under the broader category of synthetic media and are becoming a shared problem for both fraud and compliance teams.

Fraudsters are using them for a range of attacks, such as creating synthetic identities, spoofing their feeds during selfie liveness checks, and creating falsified evidence for disputes or insurance claims.

Let’s take a look at the different types of deepfakes.

Partial face morphing

Partial face morphing (identity cloaking) allows you to change specific parts of your face without having to completely hide your appearance. This can be done by blending features from other people, or by using models that let you adjust eye color, resize noses, alter jawlines, or make your face look thinner or wider in real time. Because these models use very little processing power, fraudsters use them to change their appearance during real-time video streams without any lag or obvious tells.

Fully AI-generated faces

Fully AI-generated faces are created entirely from scratch using generative AI models like DALL-E, SDXL, Midjourney, and Stable Diffusion. Attackers can easily prompt AI to generate an image of someone based on their gender, race or nationality, where they live, or even what job they have. As an example, we asked ChatGPT to generate an image of a realtor so you can see what these look like.

Beyond the publicly available AI tools, there are scam toolkits sold on the dark web that make it easy to produce hyper-realistic images and videos specifically built for fraud.

Face swapping

Face swapping replaces one person’s face with another’s, and is probably the most common type of deepfake you’ll see online today. There are many different tools that allow you to create these deepfakes by uploading an image or a video. In the GIF below, you can see one of the members of our marketing team swapping their face with Elon Musk’s.

Face reenactment / Lip syncing

Face reenactment and lip syncing allow you to animate a photograph or screenshot of a real person’s face so their expressions and mouth movements match another person’s speech or actions. You may have seen a few examples of this online, such as the fake clips of Joe Rogan interviewing Steve Jobs on his podcast, or the recordings of Albert Einstein giving a lecture. In the video below, CNBC anchor Andrew Sorkin shared a clip of someone who did this to him and his co-anchor.

These deepfakes are usually delivered in a few specific ways, and understanding how they’re presented can give you clues about what signals can be used to detect them.

- Physical presentation: This is when a deepfake is shown on a physical device, such as a phone, tablet, or computer monitor, during a call or an in-person verification. Fraudsters will often hold their phone up to the camera and play a video, or show you an image to prove what they’re saying is true.

- Video injection: This refers to deepfakes that are delivered through a virtual camera or browser plugin that feeds a video stream into a platform, such as a liveness check embedded in a fintech app or a conference call.

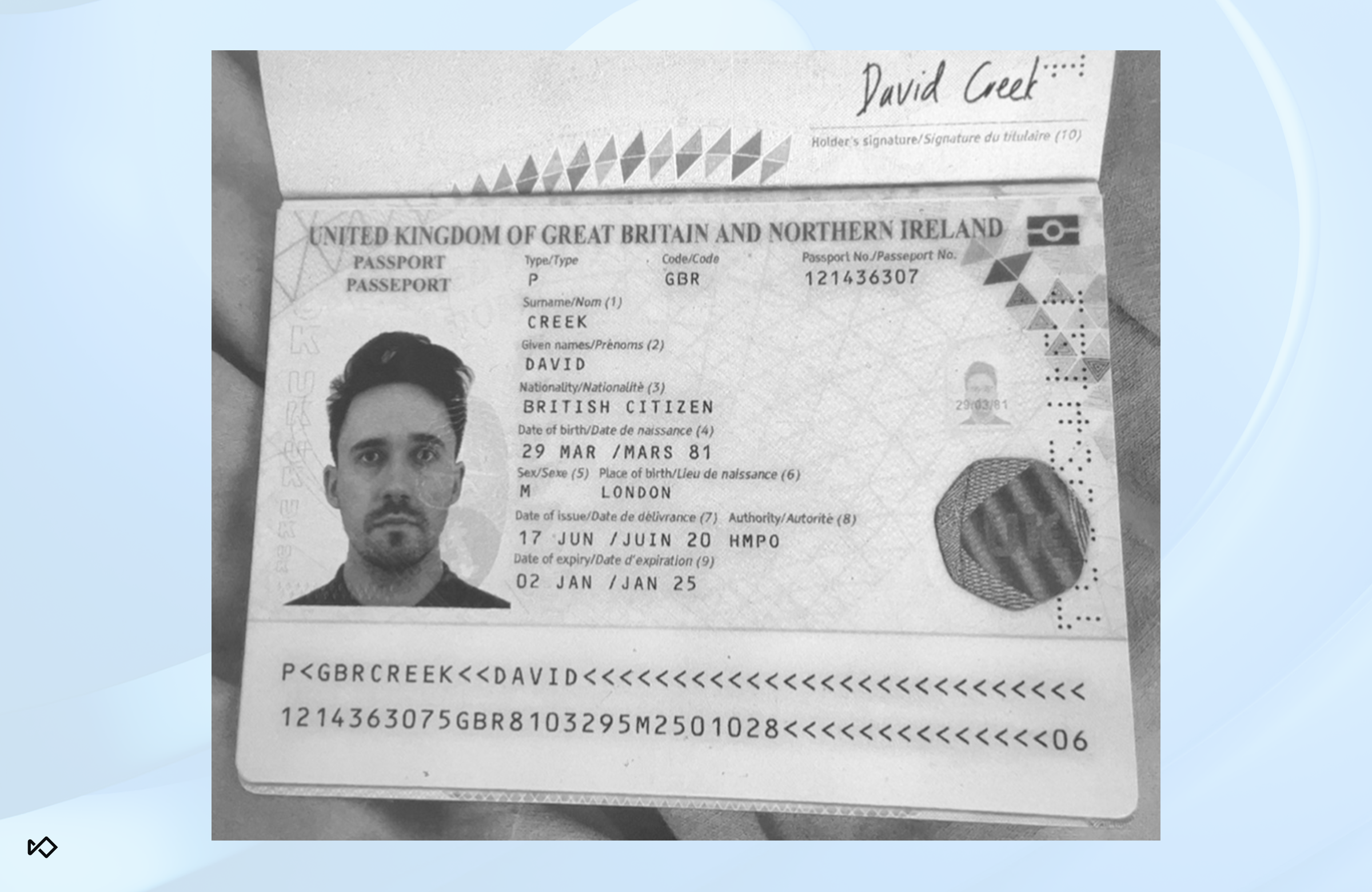

- Doctored media: This includes synthetic or manipulated documents, images and videos, such as government IDs, business registrations, bank statements, invoices, or proof-of-address forms. Fraudsters will often submit these during onboarding or via portals for requesting disputes, refunds, or expense reimbursement. But they can be used for many other types of fraud and scams.

- Screensharing: This is when a deepfake is shown during a screenshare session or embedded in a pre-recorded presentation. Sometimes fraudsters will do this when they’re socially engineering a user, or if they’re unable to inject video directly into a live conference call.

How deepfakes are used for fraud

Deepfakes are being used across a wide range of fraud vectors that affect both fraud and AML teams. You’ll see them in onboarding, logins, payments, and disputes - anywhere identity or trust is verified. Below are a few areas where we've seen fraudsters using deepfake technology.

Identity fraud: Attackers generate full faces or morph photos to create synthetic identities that pass visual checks and populate onboarding forms. These images are paired with fabricated PII and used to open accounts, enroll in services, or stitch multiple fake profiles into a single fraud ring.

Bypassing KYC: Fraudsters feed virtual camera streams, pre-recorded video, or real-time reenactment into selfie liveness checks so the system believes the user is live.

Social engineering scams: Deepfakes provide quick visual “proof” to back up impersonation calls or messages and reduce hesitation from targets. Attackers will keep videos short, add background noise or lag as cover, then push the victim to approve payments or share credentials out of band.

Job applicant fraud: There’s been a recent uptick in the use of synthetic video and headshots to clear remote job interviews. If a fraudster gets hired, they can steal your data and IP, defraud your business and customers, or engage in salary arbitrage by falsely claiming to live in high-cost areas while actually residing somewhere much cheaper.

Disinformation and reputational attacks: Fake endorsements or speeches from public figures are often created to promote scams, like crypto pump-and-dump schemes or fake investment programs. They’re also used to impersonate executives or well-known individuals to manipulate markets, spread false information, or damage a brand’s reputation.

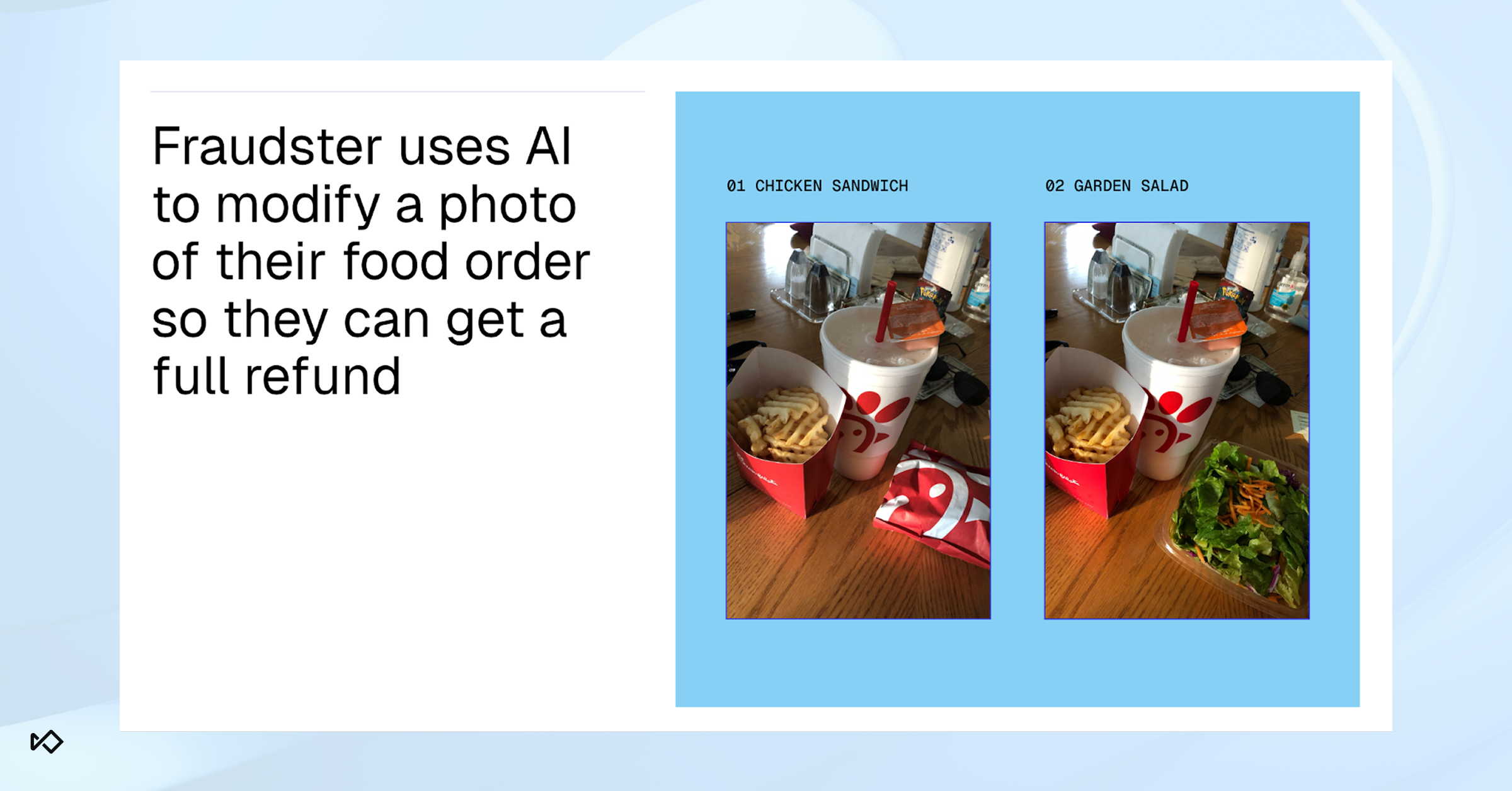

Falsified evidence for claims and disputes: Attackers use deepfakes to create convincing “proof” for chargebacks, refunds, and insurance claims. They’ll edit delivery footage to show a missing or stolen package, stage fake accident videos, or alter receipts and invoices to support a refund request. In some cases, fraudsters will generate fake doorbell videos showing a package being taken, or images of the “wrong item” being delivered to trigger instant reimbursements without human review.

Sextortion / blackmail: These scams usually target younger people and often start on social platforms. Attackers use deepfake tools to generate fake explicit images or videos of their victims and then threaten to share them publicly unless they’re paid or given access to financial accounts. This type of abuse has become a major concern for social platforms and governments worldwide, prompting new laws focused on digital identity protection and bans on explicit deepfake imagery.

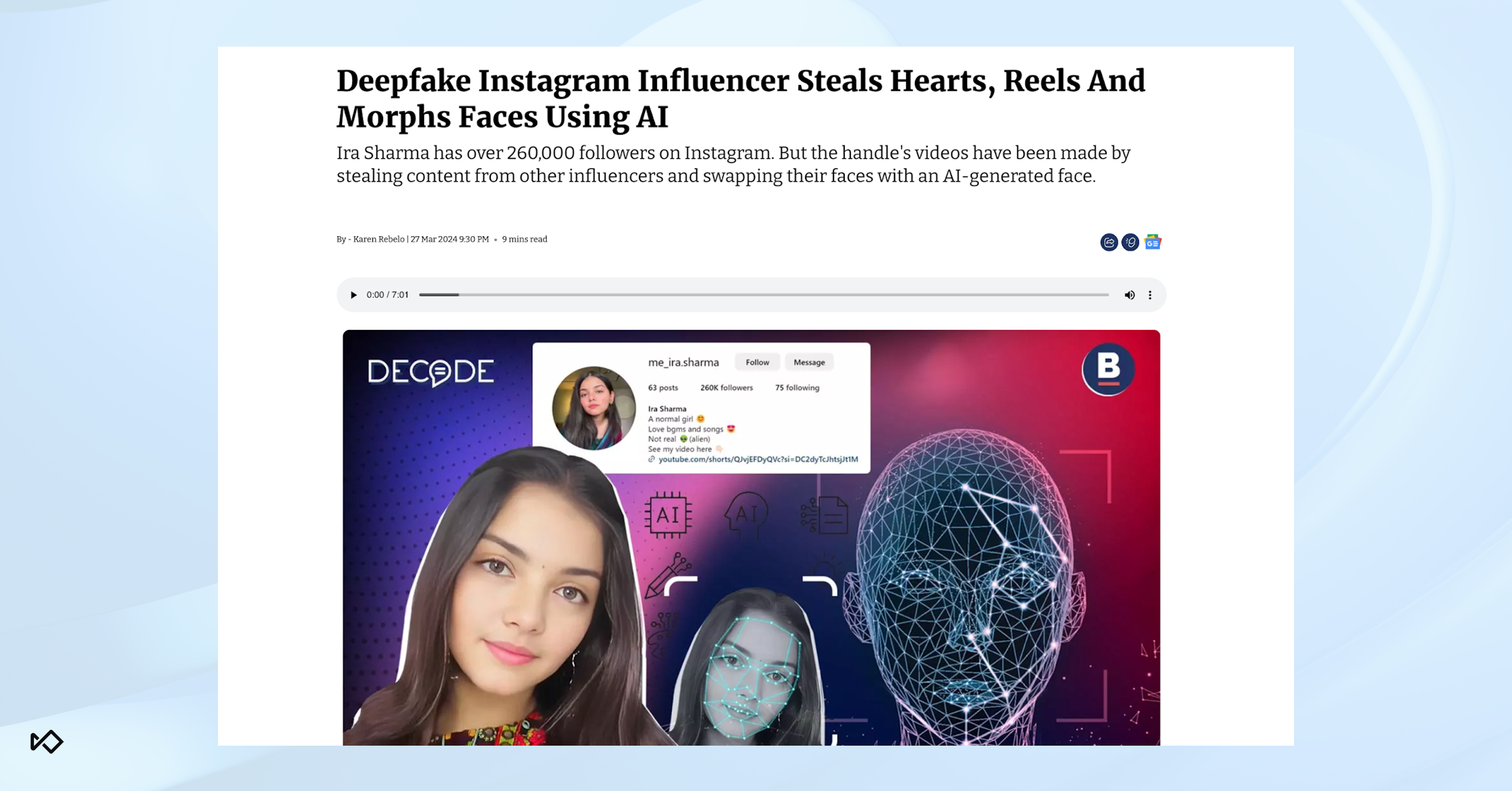

Fake accounts and bot farms: Fraudsters use generated faces and videos to create fake accounts that make scam listings and sellers look legitimate. In ecommerce and marketplaces, this shows up as fake product pages, reviews, and business listings used to boost visibility or collect payments for goods that don’t exist. Some influencers will also use fake followers to charge brands more money for sponsored posts.

Account takeovers: Deepfakes are used to impersonate legitimate users during account recovery or password reset flows that rely on selfie liveness checks. Once verified, they can reset credentials, bypass MFA, and take full control of the account.

Myths about detection

There are a few persistent myths about spotting deepfakes. Here’s what fraud operators actually need to know.

Myth #1: Most deepfakes are laggy

There are now models that can produce deepfakes with virtually no lag. Typically, the more precisely a face swap matches pixels, the more CPU/GPU capacity it needs and the more lag it causes. But fraudsters are now using lighter off-prem models to produce high-resolution deepfakes without any lag or pixelation.

Myth #2: If it’s laggy, people won’t believe it

Lag just looks like a bad internet connection. In fact, this may even strengthen the believability of a deepfake during scams. Scammers will create stories around it like “I’m in a bad area and have bad reception. I need money for gas. I’m in trouble.” They’ll do a quick call to prove they’re the person, then move the conversation back to text where they’re strong.

Myth #3: Many deepfakes look obvious

Yes, sometimes deepfakes do have weird eyes, bad teeth, or look like a poor Photoshop job. But attackers will just keep interactions short and move to text. They will also use the time of day, lighting, and camera angles to hide these known telltale signs. It’s also important to remember that scammers want to find the most gullible victims. If a victim falls for a bad deepfake, they’re seen as a better target.

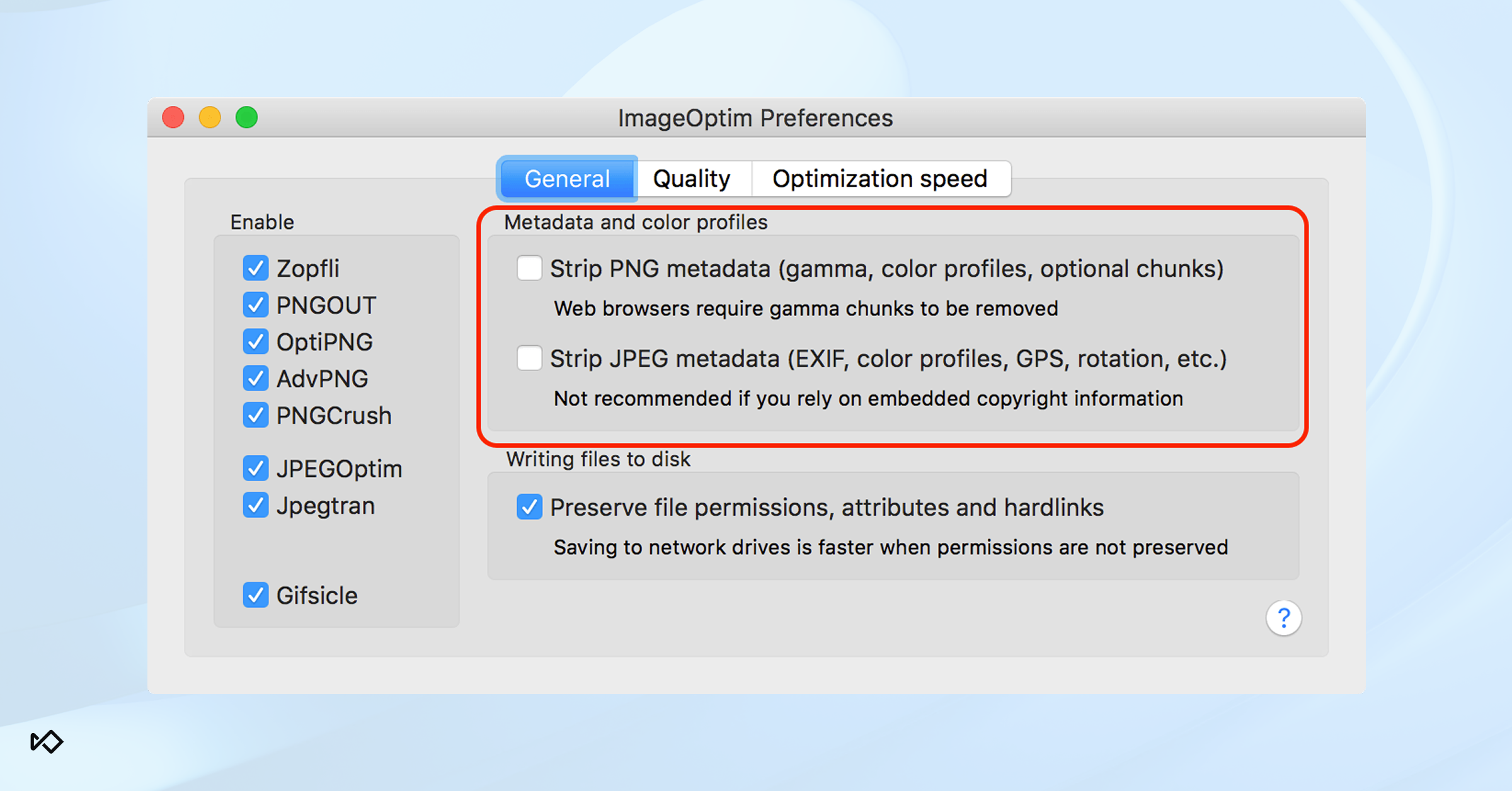

Myth #4: Metadata proves an image is real/fake

Metadata can be edited or stripped with tools like ExifTool or ImageOptim. You can also overwrite it by re-saving or screenshotting images, or re-encoding videos to wipe timestamps. Most social platforms automatically rewrite or remove EXIF data during uploads anyway. While it can sometimes be a helpful clue for detecting fake images, it’s not reliable proof of authenticity.

Myth #5: Liveness checks are foolproof

Liveness checks are only as strong as where, when, and how they’re deployed. They’re common in KYC and enhanced due diligence flows but rarely used in places like job interviews or customer support calls. Even where they are deployed, some systems can still fail on edge cases.

We’ve been able to bypass selfie liveness checks using deepfake tools and virtual cameras. The most effective approach is a layered one that combines video analysis, device intelligence, behavior biometrics, and human reviews.

Best practices for deepfake detection

Deepfake attacks are getting faster, cheaper, and harder to spot. Fraud teams need detection methods that go beyond visual analysis and can adapt as tools evolve. The goal isn’t to catch every fake by eye, but to build layered controls that surface risk signals early and make it harder for synthetic content to pass through your workflows.

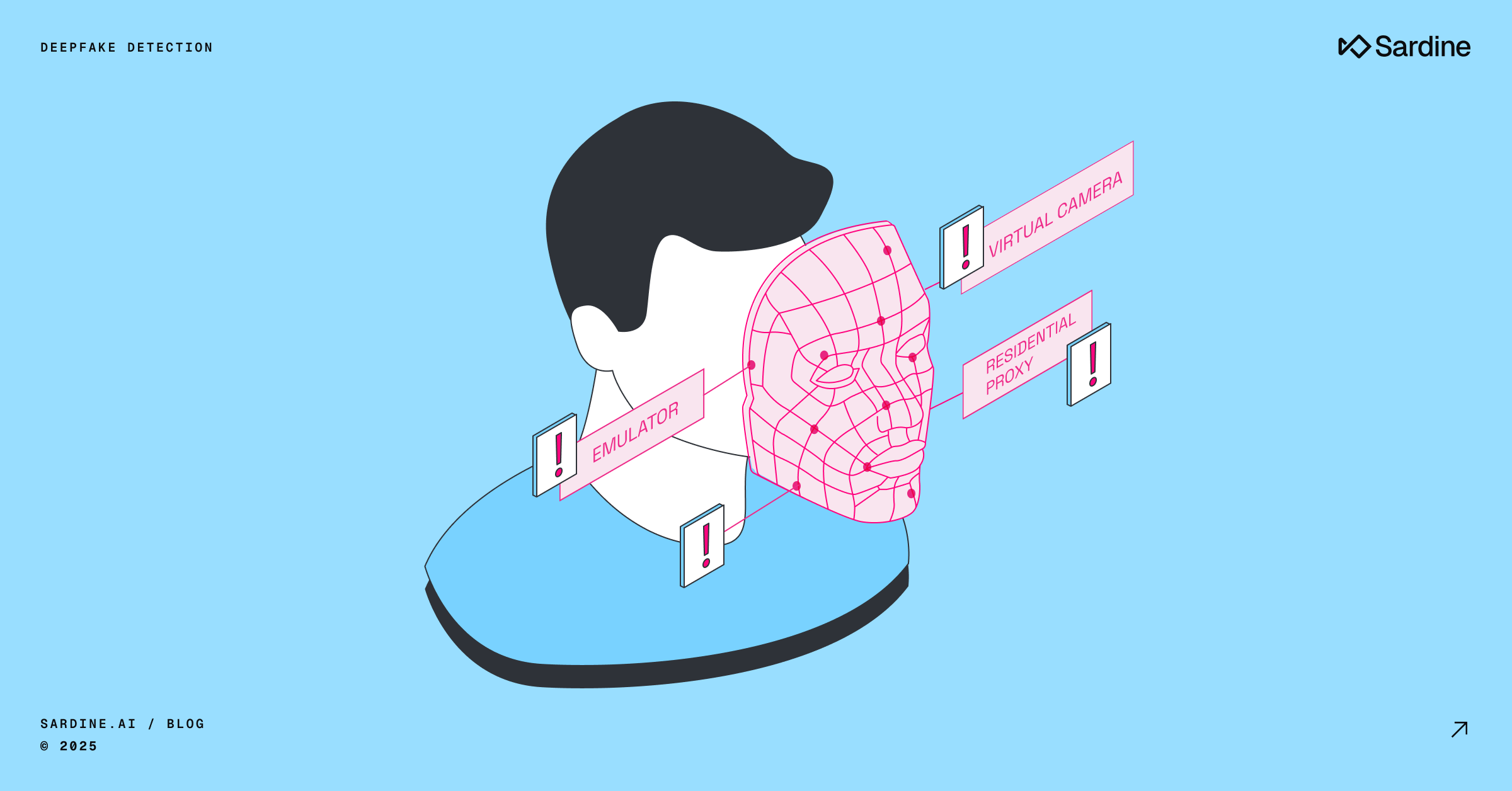

- Look for spoofing tools. Watch for signs of virtual cameras, emulators, or screen-share feeds, especially when paired with VPNs or proxies. These often show up during selfie verification, video onboarding, or any session where you expect a real camera feed.

- Watch for video inconsistencies. Compare what’s happening in the video to the user’s environment. If it’s nighttime in the video but their location indicates daylight, that’s a red flag. In job interviews, ask questions about things locals would know, like traffic patterns, popular restaurants, or nearby neighborhoods.

- Ask for actions that can’t be pre-rendered. Challenge users to do something unpredictable, like turning their head, picking up an object in front of their face, or following a random instruction. Deepfake models are designed to track a human face and its movements. If you interrupt the model’s tracking ability (e.g. by covering one side of the face with a hand or a document), the model will struggle to match only half of a face and start leaking the true identity. This works best during live KYC selfie checks, interviews, or conference calls.

- Compare images against known reference media. Match the video or image against verified ID photos, previous calls, or known database selfies. This is especially useful for repeat customer reviews, ongoing KYC monitoring, and employee verification where you’ve already captured a baseline image.

- Watch for small behavioral tells. Deepfakes can look perfect frame-by-frame but can sometimes behave unnaturally. Look for odd blinking, stiff posture, or small freezes when the model updates. Also look for partial movements of the face, where one side or section of the face has movement, while the other side or section is “stuck.” These stand out during longer interactions like interviews or live agent escalations.

- Cross-check device and session signals. If the same device ID, browser fingerprint, or IP range shows up across multiple users, you’re likely dealing with coordinated or synthetic activity. This check is critical during onboarding or account creation spikes.

- Check background audio. Deepfake tools often miss subtle sounds like room tone, echo, or keyboard clicks. You’ll catch these inconsistencies during live interviews or support calls where you expect a natural environment.

- Push to a second device. If you suspect someone is using a deepfake model and they appear to be on a laptop, ask them to switch to their smartphone. The deepfake is unlikely to look the same on the second device, and many of these models will not run on mobile devices at all.

- Build layered defenses. The best way to detect deepfakes is to use multiple types of checks together. Combine document verification, selfie or live video checks, device intelligence, behavioral biometrics, and human review. Use some of these quietly in the background to keep flows low-friction, and trigger others when risk signals appear. Strategic layering helps you catch synthetic media early without hurting the experience for your users.
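As a rough illustration of the device and session cross-check described above, the sketch below groups sessions by device ID and flags any device tied to more than a set number of distinct users. The field names (`device_id`, `user_id`), the sample sessions, and the `max_users` cutoff are all assumptions made for illustration; they are not values from any particular platform.

```python
from collections import defaultdict


def find_shared_devices(sessions, max_users=2):
    """Return {device_id: sorted user_ids} for devices seen with more than max_users users.

    `sessions` is an iterable of dicts with the hypothetical keys
    "device_id" and "user_id".
    """
    users_by_device = defaultdict(set)
    for session in sessions:
        users_by_device[session["device_id"]].add(session["user_id"])
    return {
        device: sorted(users)
        for device, users in users_by_device.items()
        if len(users) > max_users
    }


# Illustrative data only.
sessions = [
    {"device_id": "dev-a", "user_id": "u1"},
    {"device_id": "dev-a", "user_id": "u2"},
    {"device_id": "dev-a", "user_id": "u3"},
    {"device_id": "dev-b", "user_id": "u4"},
    {"device_id": "dev-b", "user_id": "u4"},  # same user twice is not sharing
]
print(find_shared_devices(sessions))  # {'dev-a': ['u1', 'u2', 'u3']}
```

The same grouping could be keyed on a browser fingerprint or IP range instead of a device ID. A reasonable cutoff depends on your user base, since households and shared office machines can legitimately produce multiple users per device.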

Leverage Sardine for deepfake detection

Sardine can help you detect deepfakes during onboarding, step-up verifications, login flows, and live video conferencing. We do this by combining our proprietary device, behavioral signals, and camera detection algorithms with custom rules we’ve built to identify synthetic media and manipulation attempts.

Our own HR team uses this technology to catch fake job applicants attempting to game our interview process.

We’ve also built novel techniques to run risk checks when the deepfake is happening outside your platform. For example, let’s say you’re sending someone a Google Meet link. Even though you can’t embed our SDK on that page, you can send them a custom Sardine URL that quickly collects device and location data before redirecting them to the actual meeting.

If you’re interested in learning more about deepfake detection solutions, contact us to schedule a demo.

Frequently Asked Questions (FAQ)

- What is a virtual camera?

A software-based camera feed that replaces the real webcam output with a pre-recorded or AI-generated video. It’s how most live deepfakes get injected into Zoom, Teams, or browser-based selfie checks.

- What is a face swapping tool?

Software that overlays one person’s face onto another’s in real time or during video creation. These tools use face-mapping models to mimic expressions and lighting, making it look like someone else is speaking or on the call.

- What regulations have been created to combat deepfake fraud?

Several countries are tightening rules around synthetic media. In the U.S., multiple states have passed laws banning malicious or non-consensual deepfakes, and a 2023 executive order set national goals for watermarking and disclosure.

The new TAKE IT DOWN Act criminalizes sharing non-consensual intimate AI imagery. The EU AI Act requires transparency and labelling of AI-generated content. Denmark is drafting laws giving people ownership rights over their digital likeness. South Korea, the UK, and Australia have also introduced or proposed criminal penalties for harmful deepfake use.

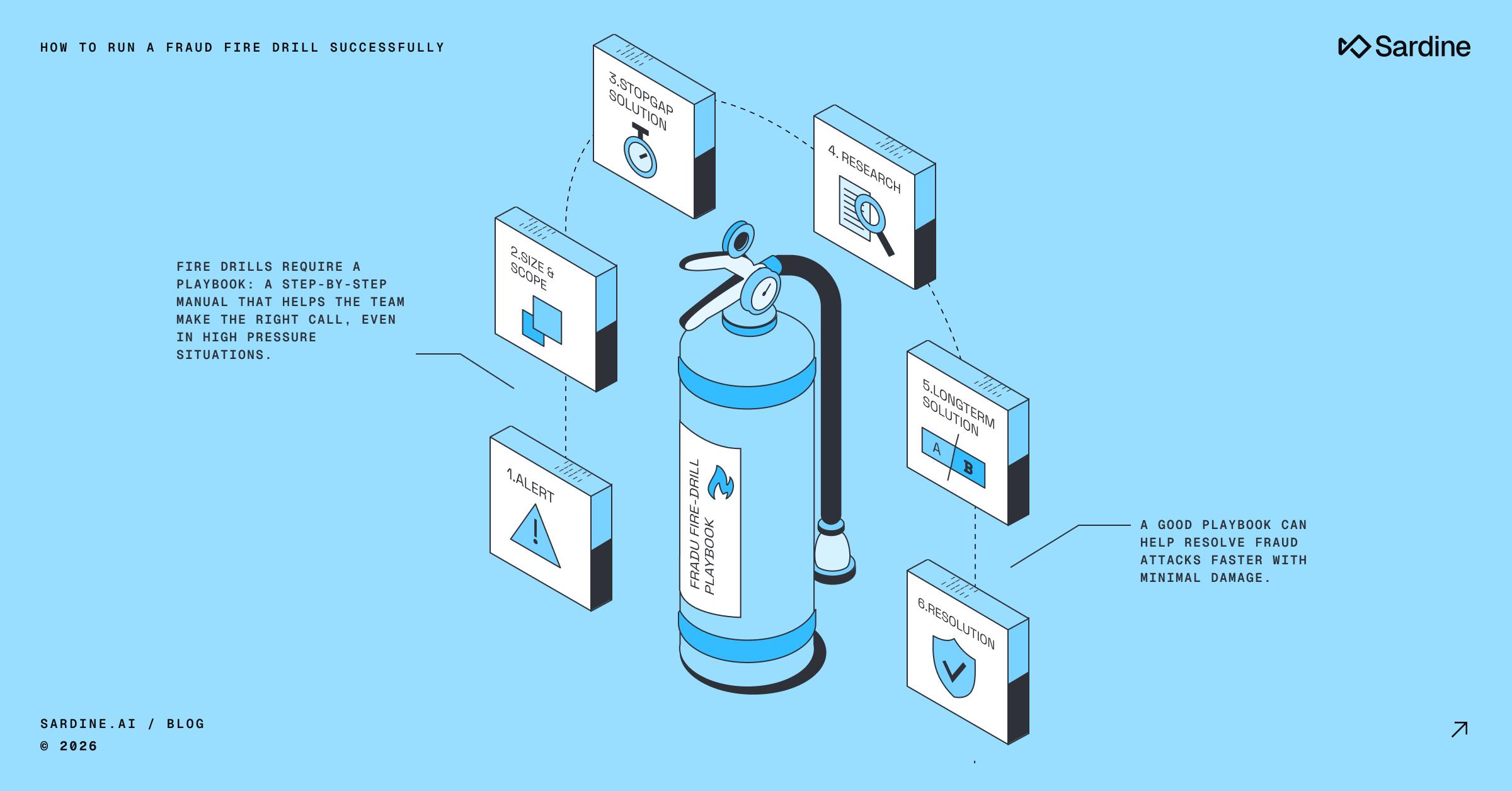

Overall, most regions are moving toward requiring clear disclosure, criminalizing identity misuse, and strengthening digital identity frameworks to prevent deepfake-enabled fraud.

- How do you triage a suspected deepfake case quickly?

Start with the strongest technical signals. Check for virtual cameras, emulators, and remote desktop sessions at the device layer.

Then review network data for VPNs, proxies, or data center IPs. Layer in behavioral indicators like missing eye movement, fixed posture, or latency between speech and facial motion.

If multiple signal categories align, route to manual review immediately. You can use objective weighted risk scoring so analysts focus on high-confidence anomalies instead of subjective reviews.

- When do you auto-deny, step-up, or route to manual review?

Use rule-based thresholds driven by model confidence.

- High-confidence spoof detections or device matches to known bad clusters should auto-deny.

- Medium-confidence results or partial signal overlap should trigger a step-up, such as active liveness or secondary ID verification.

- Low-confidence but anomalous sessions should be queued for manual review with standardized disposition codes.
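One way to picture weighted risk scoring feeding these three outcomes is the minimal sketch below. The signal names, point weights, and thresholds are hypothetical placeholders rather than recommended values; in practice they would be tuned against your own labeled cases.

```python
# Hypothetical point weights per signal (integers, so sums are exact).
SIGNAL_WEIGHTS = {
    "virtual_camera": 40,
    "emulator": 30,
    "remote_desktop": 30,
    "vpn_or_proxy": 15,
    "datacenter_ip": 15,
    "speech_face_latency": 20,
    "fixed_posture": 10,
}

# Hypothetical thresholds on a 0-100 scale.
AUTO_DENY_AT = 70
STEP_UP_AT = 40
MANUAL_REVIEW_AT = 15


def risk_score(signals):
    """Sum the weights of the observed signals, capped at 100."""
    total = sum(SIGNAL_WEIGHTS.get(name, 0) for name in signals)
    return min(total, 100)


def route(signals, known_bad_device=False):
    """Map a session's signals to auto_deny, step_up, manual_review, or allow."""
    score = risk_score(signals)
    if known_bad_device or score >= AUTO_DENY_AT:
        return "auto_deny"
    if score >= STEP_UP_AT:
        return "step_up"
    if score >= MANUAL_REVIEW_AT:
        return "manual_review"
    return "allow"


print(route({"virtual_camera", "emulator"}))        # auto_deny
print(route({"vpn_or_proxy", "speech_face_latency", "fixed_posture"}))  # step_up
print(route({"vpn_or_proxy"}))                      # manual_review
```

Keeping the weights and thresholds in one place, as constants or configuration, makes it easier to document them and apply them consistently across flows.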

Keep thresholds documented and consistent across flows so data can be used for tuning and retraining without introducing noise.

- How do you train interviewers or support agents to run live challenges?

Treat live challenges as structured verification, not casual conversation. Build an SOP that defines which prompts to use, how quickly responses should occur, and what signals to log. Agents should understand what “normal” looks like so they can recognize latency, posture freezes, or frame drops without guessing. Record every session the same way so data feeds cleanly into retraining.

Example script:

- “Hey, before we continue, I just need to do a quick verification check. Can you hold four fingers up in front of your face and look toward the camera?”

- “Thanks. Now can you pick up something nearby, maybe your phone or a cup, and hold it for a second so I can confirm motion?”

- “Can you move a little closer to the light or window? I just need to confirm we’re not getting glare on the ID.”

Those simple actions break pre-rendered or injected video streams without tipping off legitimate users. Add internal guidance on expected latency and frame response times so agents know when to escalate or flag for manual review.
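To make the SOP idea concrete, here is a minimal sketch of how a tool might pick a challenge unpredictably and log the response in a consistent shape. The prompts are adapted from the example script above; the `ChallengeResult` record, its field names, and the 5-second response limit are assumptions for illustration, not established guidance.

```python
import secrets
from dataclasses import dataclass

# Prompts adapted from the example script above.
CHALLENGES = [
    "Hold four fingers up in front of your face and look toward the camera.",
    "Pick up something nearby and hold it for a second.",
    "Move a little closer to the light or window.",
]

# Hypothetical limit; set this from your own SOP and observed baselines.
MAX_RESPONSE_SECONDS = 5.0


@dataclass
class ChallengeResult:
    """One logged challenge, recorded the same way for every session."""
    prompt: str
    response_seconds: float
    completed: bool

    @property
    def should_escalate(self):
        # Escalate if the action was not completed or the response was too slow.
        return (not self.completed) or self.response_seconds > MAX_RESPONSE_SECONDS


def pick_challenge():
    """Choose a prompt at random so the request cannot be anticipated."""
    return secrets.choice(CHALLENGES)


result = ChallengeResult(prompt=pick_challenge(), response_seconds=7.2, completed=True)
print(result.should_escalate)  # True, because 7.2s exceeds the 5.0s limit
```

Logging every challenge in the same structure is what lets the results feed into later tuning, as described above.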
